
WHAT WE DO

Empowering businesses to break through their limits with innovation that delivers real business results.

Building a business growth plan with Bluebik under the JUMP+ program of the Stock Exchange of Thailand (SET)

11 September 2025

BBIK demonstrates strong corporate governance, earning a perfect score of 100 on the AGM Checklist 2025 for the fourth consecutive year

1 September 2025

Bluebik develops outstanding young talent into future leaders through ‘Boost: The Transformation Program’, driving change for the country

21 August 2025

Bluebik reports 1H 2025 net profit up 38% to 146 million baht and expects strong growth in the second half, as a clearer economic outlook lets businesses resume their planned technology adoption

13 August 2025

Bluebik announces its successful move to the SET, unveils a strategy to upgrade its services and work processes, and pushes ahead with long-term expansion to sustain growth amid economic volatility

22 July 2025

What our clients say


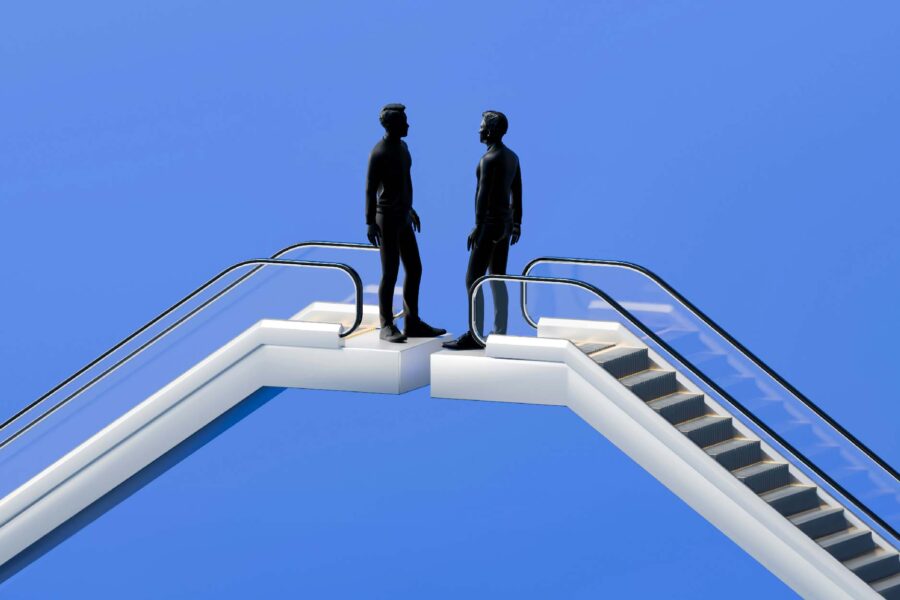

JOIN THE TEAM THAT DOES THINGS DIFFERENTLY.

Because people are at the heart of what we do, we focus on building and nurturing strong relationships with both our clients and our colleagues.

Join us

![Thumbnail TH [AI Led] Enterprise Trans.#4 Insurance](https://bluebik.com/wp-content/uploads/2025/10/Thumbnail-TH-AI-Led-Enterprise-Trans.4-Insurance.png)